Mihir Agarwal

MS Data Science @ Columbia University • Previously: Shell / Vidrona / ISEP • learn ⇄ implement ⇄ optimize

New York, NY

ma4874@columbia.edu

Hello! Welcome to my personal archive. 👋

I am a Master’s student at Columbia University, focusing on the intersection of Deep Learning and Systems Engineering. My goal is to build systems that are not only theoretically sound but also computationally efficient at scale.

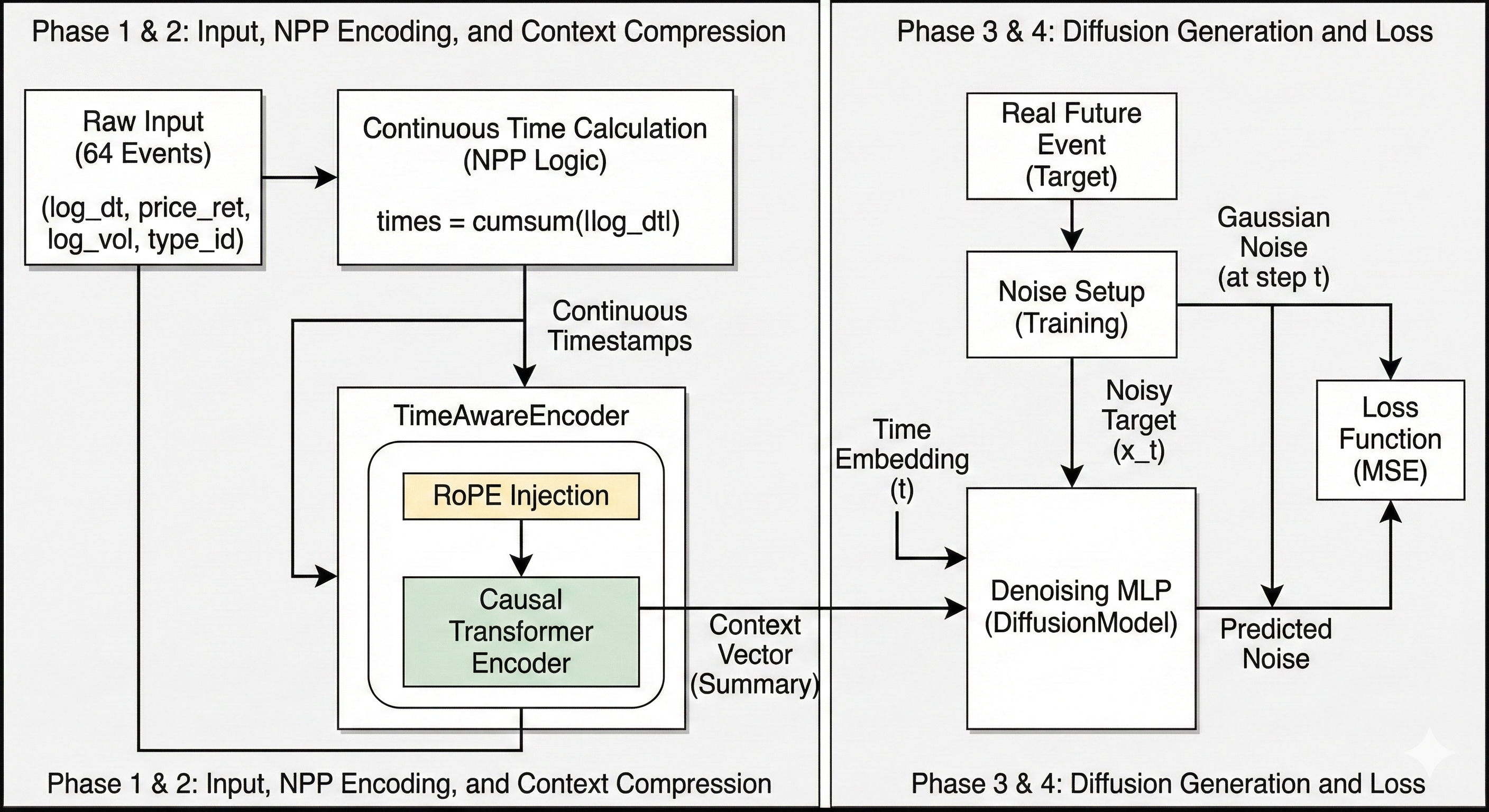

My recent research centers on architectural efficiency in generative modeling. I focus on optimizing attention mechanisms (e.g., relative vs. linear attention) to handle long-range dependencies, and on developing neuro-symbolic frameworks that integrate Neural Point Processes with Diffusion Models to capture complex continuous-time dynamics.

Professional Background

I approach research with a strong engineering discipline, honed during my three years as a Software Engineer at Shell. There, I engineered ML-driven automation pipelines and optimized large-scale testing systems, reducing execution overhead by 35%.

My research journey began at ISEP (Paris), where I co-authored a Springer journal paper on ML-based resource management for distributed systems (Fog Computing). I also have experience deploying computer vision models for industrial drone inspections at Vidrona (London).

What’s Next

I am actively looking to apply my engineering experience to theoretical challenges. I am also open to industry opportunities for Summer 2026.

I am always open to discussing technical challenges or potential collaborations.

Selected Projects

| Project | Description |
|---|---|
| Music Transformer | Comparing Vanilla Attention vs. Relative Attention for MIDI generation. |
| NeuroLOB | Generative Market Intelligence using Neural Point Processes & Diffusion. Live Demo |
| Real-Time Collaborative Code Editor | A low-latency distributed system for live coding interviews with AI assistance. Live Demo |

Experience

| Organization | Role |
|---|---|
| Shell (Bengaluru, India) | Software Engineer (Aug 2022 - Aug 2025) |
| ISEP (Paris) | Researcher (Jan 2022 - Aug 2022). Co-authored Springer paper on ML-based resource management to optimize Fog Computing latency. |
| Vidrona (London) | Machine Learning Intern (July 2020 - Aug 2022). Deployed YOLO/Faster R-CNN models for automated inspections, saving 300+ manual hours weekly. |

Selected Publications

- Machine learning-based solutions for resource management in fog computing. Multimedia Tools and Applications (Springer), 2023.